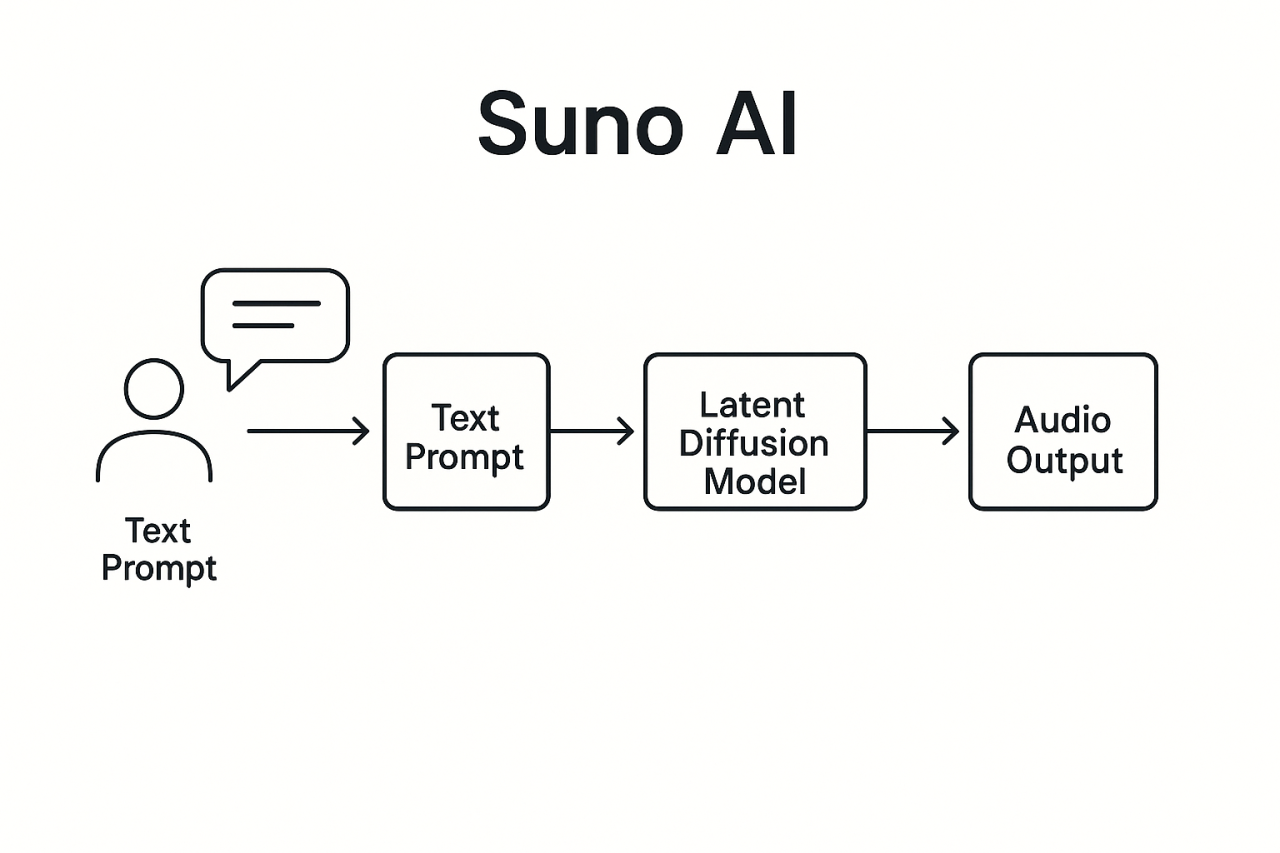

Optimizing your Suno AI prompts can transform your AI music generation from a novelty into a professional-grade music production workflow. By mastering advanced techniques such as global parameter tuning, section tagging, instructional modifiers, and meta tags, you’ll gain precise control over structure, dynamics, and sonic texture. This in-depth article walks you through each element, from setting the foundation to crafting intricate arrangements, with vivid, concrete examples throughout.

Apr 29 2025 • 24 min read • 4731 words

Comprehensive Guide to Advanced Suno AI Structuring & Prompt Techniques

- Global Parameters

- Section Tags

- Instructional Modifiers

- Meta Tags Categories & Examples

- Advanced Instrumentation & Arrangement

- Prompt Structuring Tips

- Letter-Case Optimization

- Using the Lyrics Field vs. Style Field

- Seed-Reuse via Timestamps

- Ending Songs Naturally

- Specifying Chord Progressions

- Defining Vocal Styles

- Advanced Song Examples

Global Parameters

Establishing clear global parameters is the critical first step for any successful AI music production session with Suno AI. Think of these parameters as the frame around your creative canvas: they set the track title, define the genre, lock in the tempo, and establish the overall style. Without these anchors, Suno AI’s generative engine may wander, producing unfocused or inconsistent results.

When you begin a new project in Suno AI, always start your prompt with a [Title: …] tag. Naming your track isn’t just cosmetic; it can help the model treat your prompt as a cohesive work rather than a random collection of cues. For example, [Title: Neon Reverie] may nudge the AI toward a cinematic, futuristic soundscape.

Next, specify your [Genre: …]. You can mix multiple styles—[Genre: Electronic, Hip-Hop, Drill, Rap]—to push the boundaries of conventional classification. Including multiple genres not only fuels creative fusion but also signals Suno AI to incorporate hybrid instrumentation and production techniques. For instance, an “Electronic Drill” prompt might blend 808 bass with syncopated hi-hat rolls and glitchy synth textures.

Tempo is arguably the heartbeat of your track. Use [Tempo: … BPM] to fix the pace. A [Tempo: 95 BPM] tag guides Suno AI toward a laid-back, chill vibe ideal for lo-fi or downtempo productions, while [Tempo: 140 BPM] suits high-energy styles such as trap, drill, or dubstep. For drum & bass, aim higher: the genre typically sits around 170 to 175 BPM.

Finally, encapsulate the mood with [Style: …]. The style tag sets the emotional palette: it might read [Style: Aggressive, Futuristic, Gritty] for a dark cyberpunk aesthetic, or [Style: Laid-back, Melodic, Atmospheric] for a soulful, ambient track. By combining emotive adjectives, you ensure that Suno AI shapes the instrumentation, mixing, and even the AI-generated vocals to match your desired sonic character.

By carefully defining these four global parameters—Title, Genre, Tempo, and Style—you give the AI clear guardrails. This structured approach reduces guesswork, accelerates iteration, and elevates your AI-driven music production from random experimentation to reliable, reproducible creativity.
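If you reuse the same four parameters across many sessions, a small helper keeps them consistent. This is a minimal Python sketch. `global_header` is a hypothetical name, and the tag syntax simply mirrors the examples above rather than any documented Suno format.

```python
from __future__ import annotations


def global_header(title: str, genres: list[str], bpm: int, style: list[str]) -> str:
    """Build the Title, Genre, Tempo and Style tags as a single prompt line."""
    if bpm <= 0:
        raise ValueError("tempo must be a positive BPM value")
    return " ".join([
        f"[Title: {title}]",
        f"[Genre: {', '.join(genres)}]",  # several genres, comma-separated
        f"[Tempo: {bpm} BPM]",
        f"[Style: {', '.join(style)}]",   # mood adjectives
    ])


print(global_header("Neon Reverie", ["Electronic", "Hip-Hop", "Drill", "Rap"], 140,
                    ["Aggressive", "Futuristic", "Gritty"]))
```

Keeping the header in code makes it easy to vary one parameter, such as tempo, while holding the other three fixed between generations.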

Section Tags

Section tags are the architectural blueprint of your song, enabling flow control, dynamic variation, and precise editing. When you wrap lyric lines or instrumental cues in brackets such as [Intro], [Verse], and [Chorus], you guide Suno AI to treat each segment differently, resulting in a polished arrangement with coherent transitions.

Why Section Tags Matter

First, section tags create flow control: they ensure your song progresses naturally rather than jumping from one idea to another. By explicitly marking an [Intro], you alert Suno AI to generate an opening that sets the atmosphere—whether it’s a simple piano riff, an ambient pad, or glitchy synth pulses. Similarly, labeling a [Bridge] gives the model permission to deviate from your verse-chorus loop and introduce new melodic material or a contrasting texture.

Second, section tags enable dynamic variation. When you assign different moods and intensities to each segment (perhaps a haunting [Verse] with whispered vocals and sparse guitar, followed by a full-bodied [Chorus] with soaring strings and pounding drums), the track stays engaging. This deliberate contrast prevents listener fatigue and gives each part its own identity.

Third, once you have the template of your sections, reproducibility and editing become straightforward. If you decide to extend the chorus or tweak the instrumental break, you can adjust only that section’s tags and content without rebuilding the entire prompt from scratch. This modular approach mirrors real-world music production workflows in a DAW, where isolated edits save time and maintain consistency across iterations.

Real-World Example

Imagine you want a cinematic electro-goth track:

[Intro] Mournful cello melody with sub-bass drones *thunderclap FX*

[Verse] Haunting whispered lyrics: “In the shadows we roam” {reverb: long tail}

[Pre-Chorus] Rising synth arpeggios, tension mounting (softly: “Feel the pulse…”)

[Chorus] Power chords and choir pads unite *drum fill: crash into drop*

[Bridge] Instrumental break with guitar solo *distorted feedback swoosh*

[Outro] Echoing piano motif fading on distant winds *vinyl crackle*

Here, each tag instructs Suno AI precisely how to handle transitions and textures. The intro’s cello opens the narrative, the verse’s whispered lyrics set a mood, and the chorus unleashes full instrumentation. When you revise, you might change only the [Bridge] to incorporate a piano interlude, leaving verse and chorus intact.
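Because each section is independent, you can keep a prompt as an ordered list of (tag, content) pairs and re-render it after editing a single entry. The Python sketch below assumes nothing about Suno beyond the bracket syntax used in this guide. `render` and `replace_section` are hypothetical helpers for managing your own prompt text. The asterisk-wrapped sound effects follow the convention described under Instructional Modifiers.

```python
from __future__ import annotations

# Ordered (tag, content) pairs; a list keeps the arrangement order explicit.
SECTIONS = [
    ("Intro", "Mournful cello melody with sub-bass drones *thunderclap FX*"),
    ("Verse", 'Haunting whispered lyrics: "In the shadows we roam" {reverb: long tail}'),
    ("Pre-Chorus", 'Rising synth arpeggios, tension mounting (softly: "Feel the pulse…")'),
    ("Chorus", "Power chords and choir pads unite *drum fill: crash into drop*"),
    ("Bridge", "Instrumental break with guitar solo *distorted feedback swoosh*"),
    ("Outro", "Echoing piano motif fading on distant winds *vinyl crackle*"),
]


def render(sections: list[tuple[str, str]]) -> str:
    """Render each section as '[Tag] content', separated by blank lines."""
    return "\n\n".join(f"[{tag}] {content}" for tag, content in sections)


def replace_section(sections: list[tuple[str, str]], tag: str,
                    new_content: str) -> list[tuple[str, str]]:
    """Return a new list in which every section with this tag gets new_content."""
    if not any(name == tag for name, _ in sections):
        raise KeyError(f"no [{tag}] section")
    return [(name, new_content if name == tag else content) for name, content in sections]


# Revise only the bridge; the other five sections are carried over unchanged.
revised = replace_section(SECTIONS, "Bridge", "Solo piano interlude, sparse and reflective")
print(render(revised))
```

The original list is never mutated, so you can keep earlier versions side by side and compare generations.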

In summary, section tags are indispensable for any serious Suno AI user. They bring clarity to AI music generation, empower dynamic variety, and streamline prompt revision—ultimately resulting in cohesive, structured, and engaging tracks.

Instructional Modifiers

Instructional modifiers—parentheses ( ), braces { }, and asterisks * *—unlock performance nuance, layered effects, and specialized sonic cues in AI music generation. By weaving these modifiers throughout your prompt, you transform a flat string of instructions into a rich, descriptive score that Suno AI can interpret in detail.

How Instructional Modifiers Enhance AI Music

Parentheses ( ) are ideal for whispered asides and ad-lib cues. For example, (whispered: "Under the moonlight") tells Suno AI to layer a whispered vocal track beneath your main melody, adding intimacy and texture. You can also use parentheses for emphasis, as in (LOUD: "RISE UP!") or (softly: "fade away…"), to influence the AI’s vocal mixing and delivery.

Braces { } serve to introduce backup vocals, harmonies, and instrumental layers. A directive like {backup vocals: "ooh-ooh"} instructs Suno AI to sprinkle a background choir behind your lead line, while {layer: "sub-bass for extra depth"} can thicken your low end. Effect commands such as {effect: "robotic modulation"} or {auto-tune: "subtle"} ensure specific processing is applied to your vocals or instruments.

Asterisks * * are your go-to for sound effects, ambient textures, and dynamic transitions. Phrases like *laser sweeps* or *record scratch* prompt Suno AI to embed these sonic elements directly into the track. Environmental cues such as *rainfall in the background* or *crowd cheer* enrich your production with realistic field recordings or synthesized approximations.
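To apply the three modifier conventions consistently, especially across long prompts, you can wrap them in tiny helpers. This is a sketch under the conventions this guide describes. The helper names `ad_lib`, `layer`, and `sfx` are hypothetical, and the code cannot guarantee how reliably Suno honours each modifier.

```python
def ad_lib(direction: str, text: str) -> str:
    """Parentheses: delivery cues and ad-libs, e.g. (whispered: "...")."""
    return f'({direction}: "{text}")'


def layer(kind: str, text: str) -> str:
    """Braces: backing vocals, layers and processing, e.g. {layer: "..."}."""
    return f'{{{kind}: "{text}"}}'  # doubled braces emit literal { and }


def sfx(text: str) -> str:
    """Asterisks: sound effects and ambience, e.g. *record scratch*."""
    return f"*{text}*"


line = " ".join([
    "[Chorus] Soaring synth hook",
    ad_lib("LOUD", "RISE UP!"),
    layer("backup vocals", "ooh-ooh"),
    sfx("laser sweeps"),
])
print(line)
```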

Example in Practice

Suppose you want a darkwave pop song with suspenseful tension:


Meta Tags Categories & Examples

Meta tags are the cornerstone of prompt engineering in Suno AI. These structured directives guide the AI’s interpretation of musical form, dynamics, instrumentation, and vocal characteristics. When used effectively, meta tags transform your text prompt into a detailed musical blueprint, ensuring consistent, high-quality outputs.

Why Meta Tags Matter

Precision is the hallmark benefit of meta tags. By clearly specifying [Genre: Rock], [Mood: Melancholic], or [Instrument: Piano], you eliminate ambiguity, steering Suno AI toward your exact vision. This reduces wasted generations and saves valuable credits.

Flexibility is another advantage: you can combine multiple tags to create complex fusions. For instance, writing [Genre: Jazz Fusion] [Mood: Groovy] [Instrument: Sax, Electric Piano] instructs the AI to merge jazz improvisation with electronic textures, yielding a truly hybrid sound.

Readability is often overlooked but crucial for collaboration and iteration. A well-structured prompt with meta tags is easier to edit, share, and reproduce. You and your team can quickly identify which tags control form versus which dictate tone, streamlining the creative process.

Meta Tag Categories

Song Structure Tags: These tags frame each section. Include [Intro], [Verse], [Chorus], [Bridge], and [Outro] to delineate your track’s flow. Structural clarity prevents generative drift and maintains narrative coherence.

Genre, Mood & Instrumentation Tags: Direct the AI on stylistic elements. Tags like [Genre: Ambient] [Mood: Ethereal, Floating] [Instrument: Synth Pads, Chimes] conjure a dreamy soundscape, while [Genre: Heavy Metal] [Mood: Dark, Aggressive] [Instrument: Distorted Guitar, Double Bass Drums] delivers head-banging intensity.

Vocal Customization Tags: Control vocal performance and processing. Examples include [Vocalist: Female] [Harmony: Yes] [Vocal Effect: Echo], which instructs Suno AI to layer female harmonies with spacious echo. Alternatively, [Vocalist: Male, Baritone] [Vocal Effect: Reverb, Delay] yields a deep, resonant lead voice.

Dynamic Tags: Tags such as [Dynamic: Crescendo] and [Dynamic: Forte] shape loudness contours, guiding the AI to build or soften sections dynamically. This elevates emotional impact and structural drama.
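Because meta tags share one bracketed `Key: value` shape, you can audit a prompt mechanically, for instance to confirm that every category above is covered before you spend credits. The sketch below is for your own bookkeeping only. The regular expression and the `Structure` bucket for bare section tags are assumptions of this example, not a description of how Suno parses tags.

```python
from __future__ import annotations

import re
from collections import defaultdict

# "[Key: a, b]" captures Key and "a, b"; bare tags such as "[Intro]" capture only the key.
TAG_RE = re.compile(r"\[([^\[\]:]+)(?::\s*([^\[\]]*))?\]")


def parse_tags(prompt: str) -> dict[str, list[str]]:
    """Group tag values by key; bare section tags are collected under 'Structure'."""
    tags: dict[str, list[str]] = defaultdict(list)
    for key, value in TAG_RE.findall(prompt):
        key = key.strip()
        if value.strip():
            tags[key].extend(v.strip() for v in value.split(",") if v.strip())
        else:
            tags["Structure"].append(key)
    return dict(tags)


prompt = ("[Intro] [Genre: Heavy Metal] [Mood: Dark, Aggressive] "
          "[Instrument: Distorted Guitar, Double Bass Drums] [Dynamic: Crescendo]")
for category, values in parse_tags(prompt).items():
    print(f"{category}: {values}")
```

A missing key in the result, such as no `Mood`, is a quick hint that the prompt leaves that dimension up to the model.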

Concrete Example

Imagine you’re aiming for a cinematic rock ballad that evolves into an epic orchestral finale. Your prompt might read:


Advanced Instrumentation & Arrangement

Sophisticated instrumentation and arrangement are what separate a rough AI demo from a polished, professional track. By layering complementary sounds, crafting dynamic progressions, and integrating complex rhythms, you can push Suno AI’s generative engine to produce rich, multi-dimensional compositions.

Layering for Depth and Emotion

Effective layering begins with choosing instruments that occupy distinct frequency bands. Pairing warm strings with acoustic guitar creates a natural resonance: the strings fill the midrange while the guitar provides harmonic support. Adding ethereal pads over this duo introduces cinematic depth, making the track feel expansive and emotional.

For example, in a heartfelt indie anthem, you might begin with a simple fingerpicked guitar motif, then introduce {layer: "ambient pad under chords"} via braces. Next, add {layer: "sub-bass for punch"} to ground the low end. This layering technique yields a fuller sound than any single instrument could achieve alone.

Dynamic Progressions & Variations

Dynamics are essential for maintaining listener engagement. Use tags like [Dynamic: Crescendo] to gradually build intensity, perhaps adding snare rolls and string swells over several bars. Conversely, [Dynamic: Decrescendo] can soften a section before a dramatic drop or bridge, creating emotional contrast.

Call-and-response techniques also invigorate arrangements. For instance, you might write:

[Verse] Lead synth riff echoes → {counter-melody: light piano arpeggio}

[Chorus] Choir pads respond to vocal hook → {layer: "electric guitar stabs"}

This interplay keeps the track unpredictable and engaging, much like a conversation between instruments.

Complex Rhythmic Structures

Polyrhythms and tempo modulation introduce further sophistication. Embedding a 3/4 melody over a 4/4 kick drum pattern produces a syncopated feel that adds tension and interest. You can prompt:

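Independent of the exact prompt wording, it helps to know when a three-beat melodic cell and a 4/4 kick pattern line up again. The tension comes from the accents drifting apart until they realign. This worked sketch counts in quarter-note beats, which is an assumption since the guide does not name the subdivision. It shows the two patterns coincide every 12 beats, or three bars of 4/4. The function names are illustrative only.

```python
from __future__ import annotations

from math import gcd


def realign_beats(phrase_beats: int, bar_beats: int) -> int:
    """Beats until a repeating phrase and the bar line start together again (LCM)."""
    return phrase_beats * bar_beats // gcd(phrase_beats, bar_beats)


def accent_grid(phrase_beats: int, bar_beats: int) -> str:
    """One symbol per beat over a full cycle.

    X = phrase start and downbeat coincide, p = phrase start only,
    b = downbeat only, . = neither.
    """
    symbols = []
    for beat in range(realign_beats(phrase_beats, bar_beats)):
        phrase_hit = beat % phrase_beats == 0
        bar_hit = beat % bar_beats == 0
        if phrase_hit and bar_hit:
            symbols.append("X")
        elif phrase_hit:
            symbols.append("p")
        elif bar_hit:
            symbols.append("b")
        else:
            symbols.append(".")
    return "".join(symbols)


# A three-beat melodic cell over 4/4 realigns after 12 beats, i.e. three bars.
print(realign_beats(3, 4), accent_grid(3, 4))
```

Knowing the cycle length suggests a natural section length: an 8- or 12-bar passage contains whole cycles, so the layers resolve together at the section boundary.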

Prompt Structuring Tips

Crafting a Suno AI prompt is as much an art as composing the music itself. The way you structure your prompt dramatically influences how the AI interprets your creative vision. A well-organized prompt offers clarity, minimizes misinterpretation, and ensures that Suno AI produces compositions aligned with your expectations.

One of the most effective strategies is to leverage Suno AI’s Custom Mode alongside detailed descriptors. Rather than stuffing everything into a single “Style” field, switch to Custom Mode where you can label each attribute. For example, starting your prompt with “Genre: Heavy Metal, Mood: Dark, Key: B minor” separates the core musical style from emotional context and tonal center. This prevents the AI from confusing lyrical content with stylistic instructions, an issue often encountered when prompts become ambiguous.

Equally important is the use of commas to divide different attributes clearly. In a prompt like “Gothic, Alternative Metal, Ethereal Voice”, each element stands on its own, giving Suno AI distinct cues for genre, subgenre, and vocal character. In contrast, a prompt lacking separators—“Gothic Alternative Metal Ethereal Voice”—can lead to jumbled results as the model struggles to discern where one instruction ends and another begins.

Brackets remain an indispensable tool even outside of section tags. If you want to invoke a particular vocal style mid-track, embedding “[Verse1] [Female Ethereal Voice]” within your lyrics field guides the AI to switch to an ethereal female lead at the start of your first verse. Over time, you’ll discover that precise placement of these bracketed tags can influence everything from instrumentation to mixing choices.

Remember, Suno AI thrives on specificity. A prompt that crisply defines genre, mood, tempo, and section directives will yield more consistent outputs than a vague instruction. However, avoid overloading the AI with excessive detail in a single go. If your prompt grows unwieldy, break it into iterative generations: start with broad strokes to establish the musical backbone, then refine subsequent prompts to layer in nuanced effects and advanced dynamics. By thoughtfully structuring your prompts with clear separators, bracketed cues, and targeted descriptors, you’ll turn Suno AI into a responsive collaborator that faithfully brings your musical ideas to life.

Letter-Case Optimization

Believe it or not, the way you capitalize words in your Suno AI prompts can significantly affect the AI’s output. Letter-case optimization is a subtle yet powerful technique to communicate relative priorities within your prompt. By consistently applying uppercase, title case, and lowercase conventions, you can steer Suno AI’s attention toward the most critical elements of your composition.

In practice, reserve ALL CAPS for the most important tags—typically your genres. When you write “[Genre: EDM, HIP-HOP, TRAP]”, the uppercase signals to Suno AI that these are foundational style elements. The model tends to weight uppercase text more heavily, so ensuring your primary genre descriptors are in all caps will lock in the core identity of your track.

Use Title Case for secondary descriptors such as moods, sub-genres, or special effects. For instance, “[Mood: Dark Ambient, Cinematic]” and “[Instrument: Electric Guitar, Synth Pad]” in title case signal that these attributes are important, but not as paramount as your all-caps genres. Title case occupies a middle priority level, guiding the AI to respect these instructions without overshadowing your primary style.

Finally, deploy lowercase for tertiary elements—particularly individual instruments or production nuances. A prompt like “[drums: punchy, tight] [bass: deep sub-bass]” uses lowercase to indicate fine-grained details that should be incorporated but don’t define the overall sound. This hierarchical capitalization system helps Suno AI parse your prompt in layers, mirroring how a human composer might approach a score by first choosing a genre, then establishing mood, and lastly refining instrument textures.

To illustrate, compare the following two prompts. In the first, inconsistent capitalization blends all instructions equally:

[Genre: Electronic, hip-hop, Trap] [mood: High-energy] [Instrument: Synth Leads, ambient pad]

In the second, optimized version, priorities are clear:

[GENRE: ELECTRONIC, HIP-HOP, TRAP] [Mood: High-Energy] [instrument: synth leads, ambient pad]

The second prompt ensures that the AI places primary emphasis on the three genres, recognizes the high-energy mood as a secondary attribute, and treats specific instrument colors as final flourishes. By mastering letter-case optimization, you’ll unlock a new dimension of control over Suno AI’s interpretive algorithms, resulting in tracks that better match your creative intent from the very first generation.
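The three-tier convention is mechanical enough to automate, which prevents the inconsistent casing shown in the first prompt. This Python sketch reproduces the optimized example above. It only enforces the convention: whether Suno actually weights case this way is this guide’s observation, not something the code verifies. The tier names and `cased_tag` are hypothetical.

```python
from __future__ import annotations

# Priority tiers: primary -> ALL CAPS, secondary -> Title Case, tertiary -> lowercase.
CASERS = {
    "primary": str.upper,
    "secondary": str.title,
    "tertiary": str.lower,
}


def cased_tag(key: str, values: list[str], tier: str) -> str:
    """Apply one tier's letter case to both the tag key and its values."""
    case = CASERS[tier]
    return f"[{case(key)}: {', '.join(case(v) for v in values)}]"


optimized = " ".join([
    cased_tag("Genre", ["Electronic", "hip-hop", "Trap"], "primary"),
    cased_tag("mood", ["High-energy"], "secondary"),
    cased_tag("Instrument", ["Synth Leads", "ambient pad"], "tertiary"),
])
print(optimized)
```

One caveat: `str.title` also capitalises letters after apostrophes, so a value like "rock 'n' roll" becomes "Rock 'N' Roll" and may need fixing by hand.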

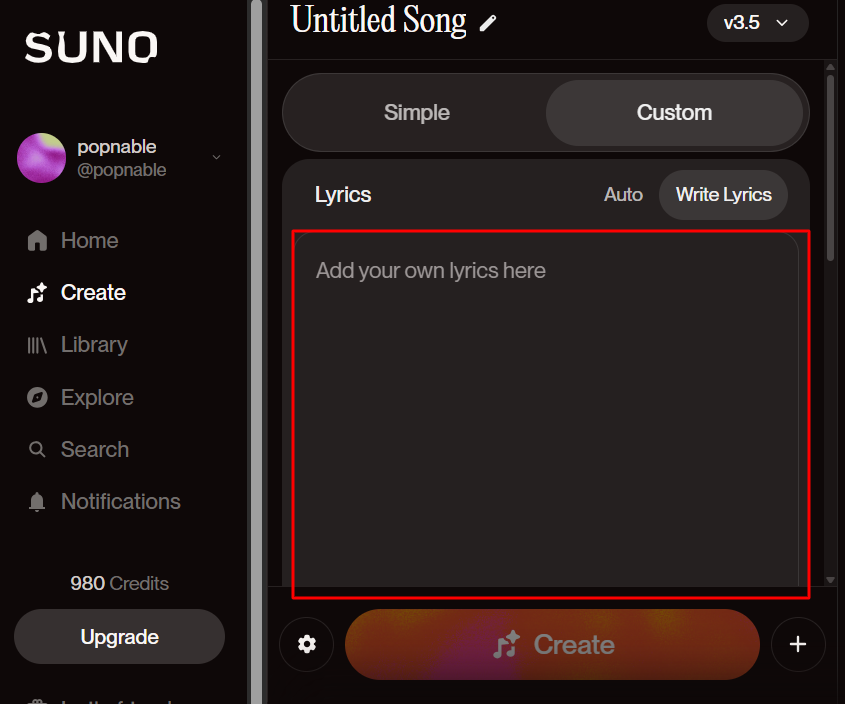

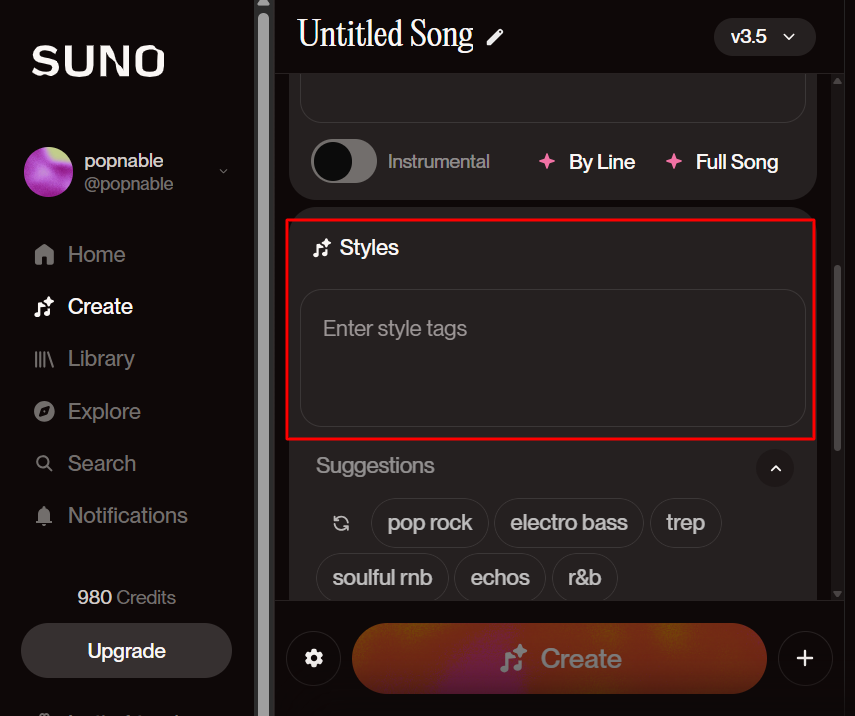

Using the Lyrics Field vs. Style Field

Suno AI’s interface divides your creative input into two distinct sections: the compact Style Field (limited to 120 characters) and the more expansive Lyrics Field. Understanding when and how to use each field is essential for efficient prompt engineering and consistent results.

The Style Field is designed for broad, song-wide instructions. It’s the perfect place to declare your track’s genre, overall vibe, and basic instrumentation. For example, entering “Lo-fi Chillhop, Downtempo, Female Vocals” here gives Suno AI a quick snapshot of your musical vision. However, the length limit means that detailed structural or dynamic directions will likely be cut or misinterpreted if you force them into this field alone.

By contrast, the Lyrics Field offers ample real estate for detailed meta-tagging, sectional cues, and performance notes. Even if you’re creating a purely instrumental track, populating the Lyrics Field with bracketed tags and modifiers can dramatically enhance your output. For instance, typing “[Intro] clean guitar arpeggio soft vinyl crackle” or “[NO VOCALS] [NO VOCALS] [NO VOCALS]” directly tells Suno AI to suppress unintended singing and focus on instrumental purity.

Seasoned Suno AI users often employ the Style Field and Lyrics Field in tandem. They start with a concise Style Field entry—“Ambient, Ethereal, 60 BPM”—to anchor the AI’s high-level approach. Then, they shift to the Lyrics Field to architect the song in layers: section tags, dynamic modifiers, and explicit instrument instructions. This two-step process ensures that Suno AI never loses sight of your overall aesthetic while still executing precise, part-by-part compositional details.

Another best practice is to avoid redundancies. If you’ve already specified your tempo and genre in the Style Field, there’s no need to repeat them verbatim in the Lyrics Field. Instead, use that space for advanced instructions like “[Bridge] risers build for 8 bars (softly: ‘breathe with me’)”. By respecting the distinct roles of the Style and Lyrics fields, you’ll maintain clarity, save time, and produce cohesive tracks that faithfully capture your creative vision every time.
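The division of labour between the two fields can be checked before you paste anything into Suno. This sketch joins style terms into the Style Field and fails loudly if they exceed the limit, rather than letting text be cut. It also drops Lyrics Field lines that merely repeat a style term. `STYLE_LIMIT` uses the 120-character figure stated in this guide; treat it as an assumption, since the limit may differ between Suno versions. `build_fields` is a hypothetical helper.

```python
from __future__ import annotations

STYLE_LIMIT = 120  # limit quoted in this guide; may differ between Suno versions


def build_fields(style_terms: list[str], lyric_lines: list[str]) -> tuple[str, str]:
    """Return (style_field, lyrics_field), refusing style text that would be cut."""
    style = ", ".join(style_terms)
    if len(style) > STYLE_LIMIT:
        raise ValueError(f"Style field is {len(style)} chars; limit is {STYLE_LIMIT}")
    # Drop lyric lines that only repeat a Style term verbatim (case-insensitive).
    repeated = {term.lower() for term in style_terms}
    kept = [line for line in lyric_lines if line.strip().lower() not in repeated]
    return style, "\n".join(kept)


style, lyrics = build_fields(
    ["Ambient", "Ethereal", "60 BPM"],
    [
        "[Intro] clean guitar arpeggio soft vinyl crackle",
        "60 BPM",  # already stated in the Style field, so it is dropped
        "[Bridge] risers build for 8 bars (softly: 'breathe with me')",
    ],
)
print(style)
print(lyrics)
```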

Seed-Reuse via Timestamps

One of Suno AI’s most powerful yet underutilized techniques is seed-reuse via timestamps. By anchoring new generations to a specific point in a prior track, you can preserve desirable musical elements—such as vocal timbre, instrumental arrangement, or production texture—while exploring fresh lyrical or melodic ideas.

Here’s how it works: after generating a track that features an appealing intro or instrumental hook, note the timestamp at which that section begins—say, 2.5 seconds. When starting a new session, enter that timestamp in Suno AI’s seed-reuse setting. This instructs the AI to initialize the generative process from the previously generated waveform at exactly 2.5 seconds, effectively carrying over the same vocal voice, band setup, or instrumental palette.

In practice, this means you can maintain a consistent sonic identity across multiple generations. For example, you might love the drum groove and vocal tone in your first take but want to experiment with alternative chord progressions or lyrical themes. By seeding at the beginning of your favorite riff, Suno AI retains the instrumental and vocal characteristics you enjoyed, while allowing you to reimagine the subsequent musical narrative.

Seed-reuse is particularly valuable when crafting themed EPs or album sides. If you’d like all tracks to share a unified sonic fingerprint—whether that’s a distinct guitar amp distortion or a specific vocal processing chain—simply reuse the same timestamp seed for each generation. You’ll get variety in composition but coherence in production, creating a professional-sounding collection that feels purposefully connected.

Of course, effectiveness can vary by genre and AI iteration. Experiment with different seed points—sometimes the sweet spot is later in the track, like the onset of a chorus at 15 seconds. And be prepared to run multiple generations; subtle tweaks in your prompt may be needed to blend the old and new elements seamlessly. But when mastered, seed-reuse via timestamps is a game-changing technique for maximizing both creative exploration and sonic consistency in your Suno AI music productions.

Ending Songs Naturally

A common frustration for Suno AI users is the abrupt cut-off at the end of their tracks. Fortunately, you can craft prompts that signal the AI to conclude your song gracefully—whether you desire a clean ending, a slow fade-out, or an elaborate outro sequence.

For a straightforward conclusion, include a simple [end] tag at your desired stopping point. This tells Suno AI to terminate the music cleanly, as though you pressed “Stop” in your DAW. If you prefer a more cinematic exit, use [fade out]. Although fade-outs can yield mixed results, they often coax Suno AI into gradually reducing instrumentation volume across several bars, creating the sensation of trailing off into silence.

For even greater control, chain tags together: [outro] [Instrumental Fade out] [End] invites Suno AI to craft a dedicated outro section with fading instruments before final silence. You might specify that your outro features a lone piano motif by writing “[outro] Solo Piano [Instrumental Fade out] [end]”, making it more likely that the final moments spotlight your chosen instrument.

Despite these advanced tags, AI interpretations can still occasionally misfire. In such cases, manually trimming or applying fade-outs in a DAW like Audacity or Ableton Live remains a reliable fallback. By editing out any lingering unwanted loops, spoken words, or errant samples, you can sculpt a polished ending that matches your artistic intent.

To minimize manual intervention, experiment with slight variations—sometimes changing the phrasing to [Song Ends] or [Stop Music] yields more consistent fade behaviors. Keep detailed notes on which commands work best for your genre and vocal style, and build a personal library of proven ending strategies. With thoughtful prompt design and a few fallback techniques, you’ll ensure that every Suno AI track finishes as elegantly as it began.
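To run these comparisons systematically, generate one variant per ending strategy from the same song body. In this sketch, `with_ending` and the strategy names are hypothetical. The tag chains are copied from this section, and which of them Suno honours best for your genre is exactly what the comparison is meant to reveal.

```python
from __future__ import annotations

# Ending tag chains from this section; alternatives are kept for side-by-side tests.
ENDINGS = {
    "clean": ["[end]"],
    "fade": ["[fade out]"],
    "outro": ["[outro]", "[Instrumental Fade out]", "[end]"],
    "song_ends": ["[Song Ends]"],
    "stop_music": ["[Stop Music]"],
}


def with_ending(lyrics: str, strategy: str, outro_detail: str = "") -> str:
    """Append an ending chain; outro_detail (e.g. an instrument) follows [outro]."""
    tags = list(ENDINGS[strategy])
    if outro_detail and tags[0] == "[outro]":
        tags[0] = f"[outro] {outro_detail}"
    return lyrics.rstrip() + "\n\n" + " ".join(tags)


song = "[Verse] ...\n\n[Chorus] ..."
for name in ENDINGS:
    # Print only the last line of each variant so the endings are easy to compare.
    print(f"{name:>10}: {with_ending(song, name, 'Solo Piano').splitlines()[-1]}")
```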

Specifying Chord Progressions

While Suno AI excels at generating melodies, guiding it to follow a precise chord progression can require some creative prompt engineering. By clearly labeling chord changes and linking them to mood descriptors, you can coax the AI into honoring your harmonic roadmap rather than inadvertently composing lyrical references to chord names.

First, switch into Custom Mode so that Suno AI knows to treat your chord annotations as musical instructions rather than lyrical content. You might write:

Style: instrumental trance in A minor

Lyrics: [Am] [F] [G] [Em]

By explicitly separating Style from Lyrics, you signal that [Am] [F] [G] [Em] are chord progression markers, not words to be sung. If the AI still hesitates, prefix your progression with “Chord progression:”—for example, “Chord progression: [Am] [F] [G] [Em]”—to drive the point home.

Another valuable tactic is to associate mood with scale: tagging “Mood: sad” can encourage Suno AI to embrace the minor key feel inherent in your target progression. Mood descriptors such as “melancholic,” “yearning,” or “eerie” further reinforce the emotional foundation of minor chords, making it likelier that the AI will weave those chords organically into the composition.

Experiment with providing chord transitions at section boundaries too. Placing [Chorus] Chord progression: [Am] [F] [G] [Em] encourages your hook section to follow the intended harmonic sequence. If you want a more complex change-up, preface it with a pre-chorus tag: “[Pre-Chorus] Chord progression: [Dm] [Am] [Em] [G]”. Over successive generations, you’ll learn which placements Suno AI reliably treats as essential structural elements rather than optional style cues.
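Before spending credits, check that a progression actually sits inside the key named in the Style field. The sketch below lists the seven diatonic triads of a natural minor key, flags chords outside them, and formats a section line in the style used above. It assumes the natural minor scale and sharp-only spellings, so a flat such as Bb is not recognised. It checks your notation only and says nothing about whether Suno will follow it. All function names are hypothetical.

```python
from __future__ import annotations

NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
NATURAL_MINOR_STEPS = [2, 1, 2, 2, 1, 2, 2]        # semitones between scale degrees
MINOR_QUALITIES = ["m", "dim", "", "m", "m", "", ""]  # i, ii°, III, iv, v, VI, VII


def diatonic_triads_minor(tonic: str) -> list[str]:
    """Chord symbols for the seven triads of a natural minor key (sharps only)."""
    idx = NOTES.index(tonic)
    chords = []
    for step, quality in zip(NATURAL_MINOR_STEPS, MINOR_QUALITIES):
        chords.append(NOTES[idx % 12] + quality)
        idx += step
    return chords


def off_key(progression: list[str], tonic: str) -> list[str]:
    """Chords in the progression that fall outside the key's diatonic triads."""
    allowed = set(diatonic_triads_minor(tonic))
    return [chord for chord in progression if chord not in allowed]


def progression_line(section: str, chords: list[str]) -> str:
    """Format a section tag followed by a bracketed chord progression."""
    return f"[{section}] Chord progression: " + " ".join(f"[{c}]" for c in chords)


print(diatonic_triads_minor("A"))
print(off_key(["Am", "E", "F", "G"], "A"))
print(progression_line("Chorus", ["Am", "F", "G", "Em"]))
```

A flagged chord is not necessarily a mistake. The major E above comes from the harmonic minor and is common in A minor songs, but it is worth knowing it departs from the natural minor mood you asked for.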

Remember, achieving perfect adherence may require iterative tweaking: adjust the order of mood, style, and chord tags, or try synonyms for “chord progression” like “harmony sequence.” With persistence and precise labeling, you’ll empower Suno AI to follow your chord roadmap, creating compositions that resonate with your intended harmonic character.

Defining Vocal Styles

Getting the right vocal character from Suno AI can elevate a track from “machine-generated” to “human-feeling.” By employing detailed descriptors and combining them thoughtfully, you can guide the AI toward unique vocal tones—whether you want a gritty male rap delivery, a soaring operatic soprano, or an entrancing ethereal whisper.

Start by specifying gender and range: [Vocalist: Male, Baritone] contrasts with [Vocalist: Female, Soprano], each cueing different pitch registers and timbral qualities. To add texture, include adjectives like “gritty,” “airy,” or “velvety,” producing tags such as “[Vocalist: Female, Airy, Background]” or “[Vocalist: Male, Gritty, Lead].” Suno AI responds to these layered descriptors by modulating breathiness, vocal growl, and tonal warmth accordingly.

Geographic distinctions can also powerfully shape vocal style. Tagging [Vocalist: UK Rock Male] or [Vocalist: Nashville Country Female] signals regional accents, idiomatic phrasing, and stylistic inflections—ensuring that your vocal performance reflects the conventions of the genre’s birthplace. This localization trick is especially effective for world music fusions, where authenticity in vocal delivery can make your AI track stand out.

Don’t hesitate to experiment with combinations. For a haunting choral effect, you might write [Vocalist: Female, Soprano] [Harmony: Yes] [Vocal Effect: Reverb, Delay], prompting Suno AI to layer multiple soprano voices with spacious effects. Conversely, a raw, lo-fi hip-hop aesthetic could use [Vocalist: Male, Rap] [Vocal Effect: Distortion] [Dynamic: Forte] to produce an aggressive, overdriven vocal hook.

Repeated trials and incremental adjustments are key. Note which descriptors yield the most natural-sounding output, and refine your vocabulary accordingly. Over time, you’ll build a personal lexicon of successful vocal tags that reliably produce the tone, character, and emotional depth you’re seeking—turning Suno AI into your own virtual choir or rap collaborator.
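A personal lexicon can be as simple as a ratings log. The class below is a hypothetical bookkeeping sketch: it stores the vocal tag strings you tried and the 1 to 5 scores you gave them by ear, then ranks combinations with enough trials. It does not call Suno or analyse audio.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean


class VocalLexicon:
    """Log each generation's vocal tags with a 1-5 rating you assign by ear."""

    def __init__(self) -> None:
        self._ratings: dict[str, list[int]] = defaultdict(list)

    def record(self, tags: str, rating: int) -> None:
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self._ratings[tags].append(rating)

    def best(self, min_trials: int = 2) -> list[tuple[str, float]]:
        """Tag strings tried at least min_trials times, highest mean rating first."""
        scored = [(tags, mean(r)) for tags, r in self._ratings.items() if len(r) >= min_trials]
        return sorted(scored, key=lambda item: item[1], reverse=True)


choir = "[Vocalist: Female, Soprano] [Harmony: Yes] [Vocal Effect: Reverb, Delay]"
rap = "[Vocalist: Male, Rap] [Vocal Effect: Distortion] [Dynamic: Forte]"
lex = VocalLexicon()
for tags, score in [(choir, 4), (choir, 5), (rap, 3), (rap, 2)]:
    lex.record(tags, score)
for tags, avg in lex.best():
    print(f"{avg:.1f}  {tags}")
```

Requiring a minimum number of trials keeps one lucky generation from topping the list, which matters given how much Suno’s output can vary between runs.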

Advanced Song Examples

To illustrate how all these techniques coalesce into complete compositions, let’s examine three fully fleshed-out Suno AI prompt scenarios. Each example showcases different genres, structures, and advanced formatting tricks, demonstrating how you can apply the strategies above in real-world AI music production.

By integrating global parameters, section tags, instructional modifiers, meta tags, advanced layering, and detailed vocal descriptors, you can unlock the full power of Suno AI for professional, reproducible, and deeply expressive music creation. Follow these comprehensive guidelines, experiment boldly, and watch as Suno AI transforms your structured prompts into dynamic, polished compositions that rival traditional studio productions.
